OpenAI

2025

LLM Basics: OpenAI Function Calling

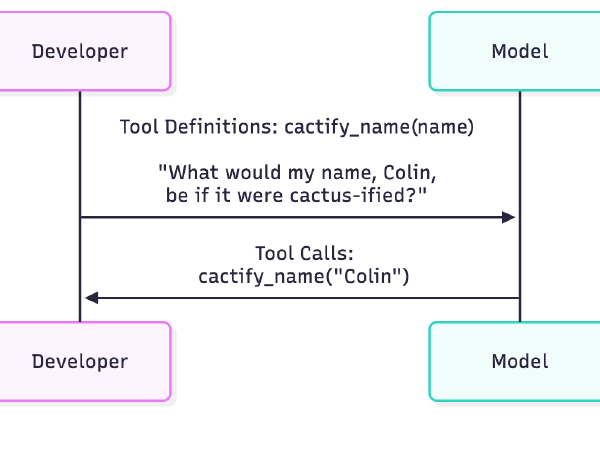

In our previous post, we explored how to send text to an LLM and receive a text response in return. That is useful for chatbots, but often we need to integrate LLMs with other systems. We may want the model to query a database, call an external API, or perform calculations.

Learning LLM Basics with OpenAI

For some time now, we’ve been using tools like ChatGPT and Copilot for day-to-day tasks, but mostly through the conversational AI chatbots they provide. We’ve used them for everything from drafting emails to type-ahead coding assistance. We haven’t, however, gone a step further and integrated them into a development project. Honestly, we weren’t sure where to start. Looking into the available options, we quickly ran into a dozen new concepts, from vector stores to agents, and different SDKs that all seem to solve similar problems.